An AI conveyor belt in NVIDIA Isaac Sim - Part 3 (Final)

Introduction

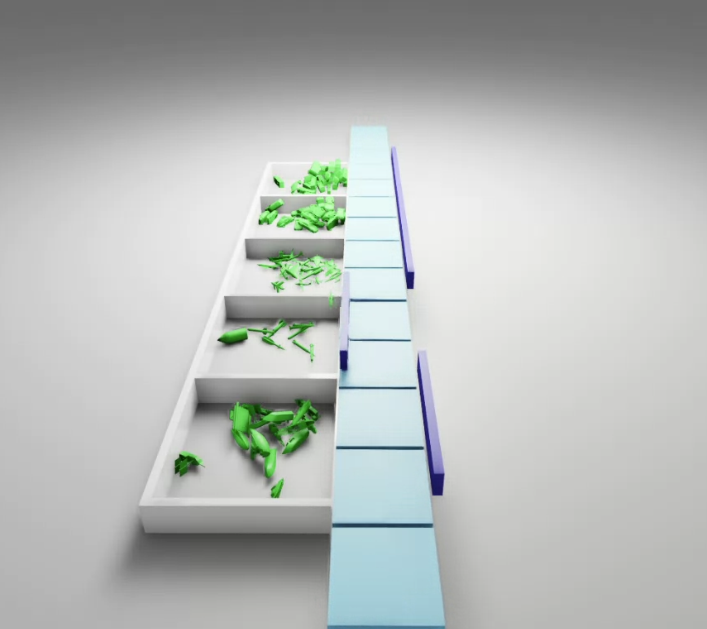

I’ve put it all together: a conveyor belt that sorts objects based on ML image classification in NVIDIA Isaac Sim.

What is this?

- A simulated conveyor belt using PhysX physics simulation in Isaac Sim (see Part 1).

- An ML classifier that has been trained on synthetic data (see Part 2).

- Robotic actuation for each object class based on the image classifier.

(If you just want to look at the code, it is here.)

Classifying each object and actuating the pusher

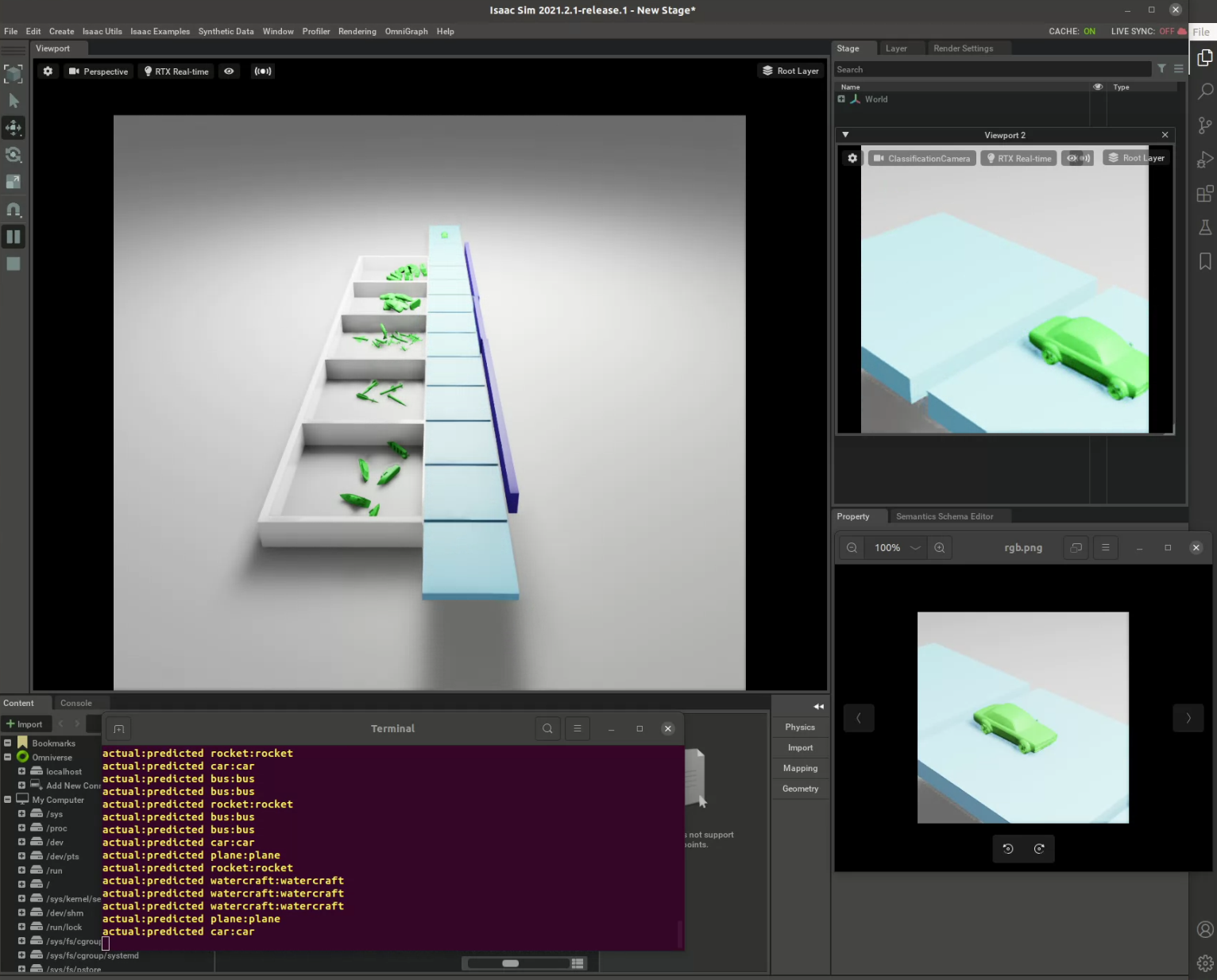

Here is the NVIDIA Omniverse app running the simulation showing the camera viewport (top right), and two debug windows showing the terminal (bottom left) and the RGB ground truth (bottom right):

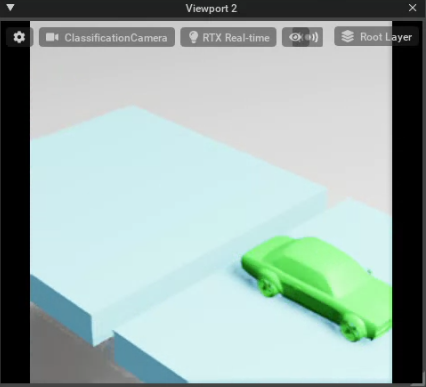

Let’s start with the camera viewport:

This viewport shows the current view of the camera we created for classification. It is positioned to get decent information from the object. Initially I had it positioned directly above the belt, but that lost a lot of detail (no shadows, no side details) and classification accuracy was not great. The image you see here is a bit different from the ones I used for training, as the edges of the belt were not present during synthetic data generation.

If I wanted to do another iteration, I would regenerate the synthetic data to better reflect this camera angle and include the “belt” sides.

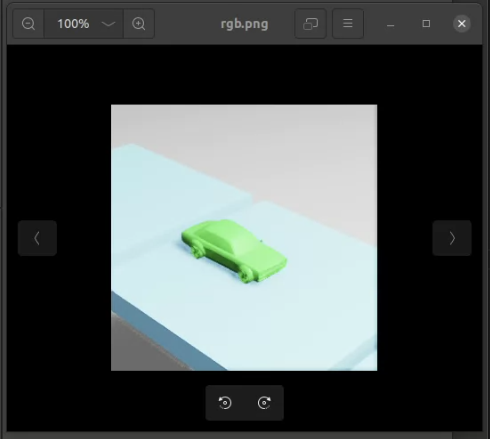

What then happens in code is that the RGB ground truth is captured from the viewport and used for classification. I also output it to file so I can see what’s happening during the simulation:

    import numpy as np
    from PIL import Image

    # `sensors` and `self.model` are set up elsewhere in the full code.
    def capture_gt(self):
        # Isaac Sim sensor returns RGBA image
        rgb = sensors.get_rgb(self.classification_viewport)
        # Discard alpha channel
        rgb = rgb[:, :, :3]
        # Prepare for classification (expects a batch)
        batch = np.expand_dims(rgb, axis=0)
        # Prediction using TensorFlow model
        prediction = self.model.predict(batch)
        self.widget_class = np.argmax(prediction)
        print(f"actual:predicted "
              f"{self.current_widget_category}:"
              f"{self.categories[self.widget_class]}")
        # Debug image to disk
        image = Image.fromarray(rgb)
        image.save("/tmp/rgb.png")

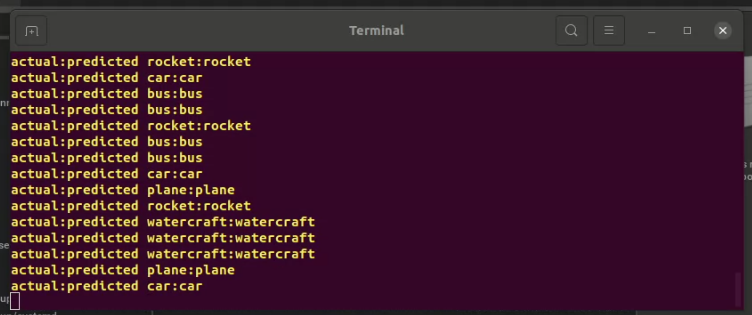

The debug terminal outputs the actual object class and the predicted class:

The RGB ground truth is captured to disk:

How did I train the model? I used the Keras example code from here:

https://keras.io/examples/vision/image_classification_from_scratch/

I’m not too concerned with the accuracy of the model; my goal was to stitch it all together. I could have used a better model (e.g. Xception), better synthetic data, better validation data, etc. I think the accuracy with the best validation dataset was 97%, which is good enough for my purposes.
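Since `capture_gt` prints an actual:predicted pair for every object, a running accuracy for the simulation can be tallied from that terminal output. The following is a minimal sketch and not part of the original code: the helper name `accuracy_from_log` and the sample class names are hypothetical, and it assumes category names contain no colons.

```python
def accuracy_from_log(lines):
    """Return (correct, total) for lines like 'actual:predicted cube:sphere'."""
    prefix = "actual:predicted "
    correct = total = 0
    for line in lines:
        line = line.strip()
        if not line.startswith(prefix):
            continue  # ignore unrelated terminal output
        actual, _, predicted = line[len(prefix):].partition(":")
        total += 1
        correct += actual == predicted  # True counts as 1
    return correct, total


# Hypothetical sample output; the class names are placeholders.
sample = [
    "actual:predicted cube:cube",
    "actual:predicted cone:cube",
    "some other log line",
    "actual:predicted cylinder:cylinder",
]
correct, total = accuracy_from_log(sample)
print(f"{correct}/{total} correct ({correct / total:.0%})")
```

This only measures accuracy on whatever objects happened to pass the camera during a run, so it is a sanity check rather than a replacement for a proper validation set.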

Once the object class is known it’s pretty simple to set the target position of the joint:

    self.pushers[self.widget_class].CreateTargetPositionAttr(120.0)
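To make the dispatch explicit, here is a small sketch of the classify-then-push step with the joint drive replaced by a stand-in so it runs outside Isaac Sim. `FakeDrive`, `actuate`, the three-class setup, the rest angle of 0.0 and the idea of returning the other pushers to rest are all assumptions for illustration; only the `CreateTargetPositionAttr(120.0)` call comes from the original code.

```python
class FakeDrive:
    """Hypothetical stand-in for a joint drive; records the last target."""

    def __init__(self):
        self.target = 0.0

    def CreateTargetPositionAttr(self, value):
        # Same method name as in the post; here it only stores the value.
        self.target = value


def actuate(pushers, widget_class, push_angle=120.0, rest_angle=0.0):
    """Drive the pusher for the predicted class; send the others to rest."""
    for index, pusher in enumerate(pushers):
        angle = push_angle if index == widget_class else rest_angle
        pusher.CreateTargetPositionAttr(angle)


pushers = [FakeDrive() for _ in range(3)]  # assumed: one pusher per class
actuate(pushers, widget_class=1)
print([p.target for p in pushers])
```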

Lessons learnt

The goal of this experiment was to learn Isaac Sim, apply some deep learning I have studied recently and learn some synthetic data generation techniques. Here are some things I learned:

- I don’t think creating the scene completely in Python is productive. There’s a reason engineers use CAD tools to design objects. A completely parametric design has its advantages, but for anything beyond trivial complexity it’s probably not worth it. If I were going to develop a “proper” synthetic data environment, I would combine parametric model generation, or parametric object placement, with design in a high-level 3D modelling environment. Creating joints, and specifically joint positions that depend on multiple objects, is a pain in code. For this scene, I would create the belt as a USD asset, and each pusher arm + box as an asset, and place them appropriately.

- The physics was more difficult than I expected and required a bunch of tinkering. Things like mass, damping, etc. require some effort to get right (this is to be expected in hindsight).

- Isaac Sim is complex, especially from the Python side. There are often multiple ways of doing things (e.g. using the Omniverse higher-level libraries vs the USD libraries). There are different ways of sizing things (like cubes) using the different APIs, and physics uses the lower-level APIs. That being said, the examples and documentation are really good, especially the tutorials. And I haven’t encountered any bugs, which has not been the case with similar complex environments.
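The mass and damping tinkering from the physics lesson can also be explored outside the simulator. A position drive with zero target velocity behaves like a spring-damper, with force = stiffness * (target - position) - damping * velocity, so a one-dimensional toy model shows how damping changes overshoot. This is a rough sketch with made-up values, not the parameters used in the scene, and `simulate_drive` is a hypothetical helper.

```python
def simulate_drive(stiffness, damping, mass=1.0, target=120.0,
                   dt=0.001, steps=5000):
    """Integrate a 1-DOF spring-damper drive and return the peak overshoot."""
    position = velocity = 0.0
    peak = 0.0
    for _ in range(steps):
        force = stiffness * (target - position) - damping * velocity
        velocity += (force / mass) * dt  # semi-implicit Euler: velocity first
        position += velocity * dt
        peak = max(peak, position)
    return max(0.0, peak - target)


# Illustrative values only: low, near-critical and high damping.
for damping in (2.0, 20.0, 40.0):
    overshoot = simulate_drive(stiffness=100.0, damping=damping)
    print(f"damping={damping:5.1f} overshoot={overshoot:7.3f}")
```

With low damping the pusher swings well past its target before settling, while heavy damping removes the overshoot at the cost of a slower push. The real scene involves the same kind of trade-off, with contacts and multiple bodies on top.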

You can find the full code here.

What’s next

Not exactly sure, but I am interested in using Isaac Sim as a synthetic environment for RealityKit on iOS, so I might try something in that direction.